Research interests

My research is concerned with structure-preserving machine learning [1], [2], with a particular focus on deep learning (DL) methods, i.e. methods using deep neural networks (NNs). Inspired by the successes of structure-preserving numerical methods (e.g., symplectic integrators in long-time simulations of physical systems), the goal is to design machine learning (ML) methods that provably satisfy desirable structural constraints and to better understand existing ML methods by connecting them to numerical analysis. Important examples of structural constraints that we would like to incorporate into ML methods are symmetries [3], [4], stability (for example in the form of Lipschitz constraints) [1], [5], [6], [7], [8] and conserved quantities [5], [6]. Among the advantages of these structural constraints are that they can reduce the need for training data and that they can be used to ensure that a model can confidently be used in downstream tasks by giving convergence guarantees. These advantages are of particular importance in real-world, safety-critical, applications such as medical imaging, where data is scarce and where there is a natural skepticism of the black-box nature of generic ML methods.

I first started working in this area a few years ago, with my collaborators and I writing a paper [2], which received the John Ockendon Prize from the European Journal of Applied Mathematics in 2022. It remains a highly active field of research: my collaborators and I prepared a proposal for an MSCA Staff Exchange programme in this direction, REMODEL, which started in early 2024.

Structure-preserving ML for ill-posed inverse problems in imaging

One of my main application areas of interest is inverse problems. Important examples include the reconstruction problems for magnetic resonance imaging (MRI) and computed tomography (CT). The goal is to estimate an image \(u\) from measurements \(y\), where \(u\) and \(y\) are related by \(y = \mathfrak{N}(A(u))\) for some forward operator \(A\) and noise-generating process \(\mathfrak{N}\), both of which are generally known. In MRI, for example, the forward operator can be modelled well as a subsampled Fourier transform, \(A = S \mathcal F\), while in CT, it is modelled as a Radon transform. Generally, the inversion is ill-posed, meaning that a naïve inversion is not defined or is unstable. As a result, it is necessary to regularise the inversion. A well-established regularisation approach is variational regularisation [9]: the image is reconstructed from measurements \(y\) by solving a minimisation problem such as \[\label{prob:varreg} \hat u = \mathop{\mathrm{argmin}}_u\, d(A(u), y) + \alpha J(u) \tag{1},\] where the first term measures the data discrepancy and the second term (the regulariser) measures compliance with prior information. By incorporating this prior information, we stabilise the inversion, at the cost of introducing some bias. This approach is supported by a large body of theory [9], but its reconstruction fidelity is generally held back by the fact that \(J\) is typically hand-crafted, and manual tuning is used to improve reconstructions. A prototypical example of a hand-crafted prior is the total variation (TV) prior which favours piecewise constant signals [10]. Significant gains in reconstruction quality can be obtained by allowing parts of this optimisation problem to be tuned using data.

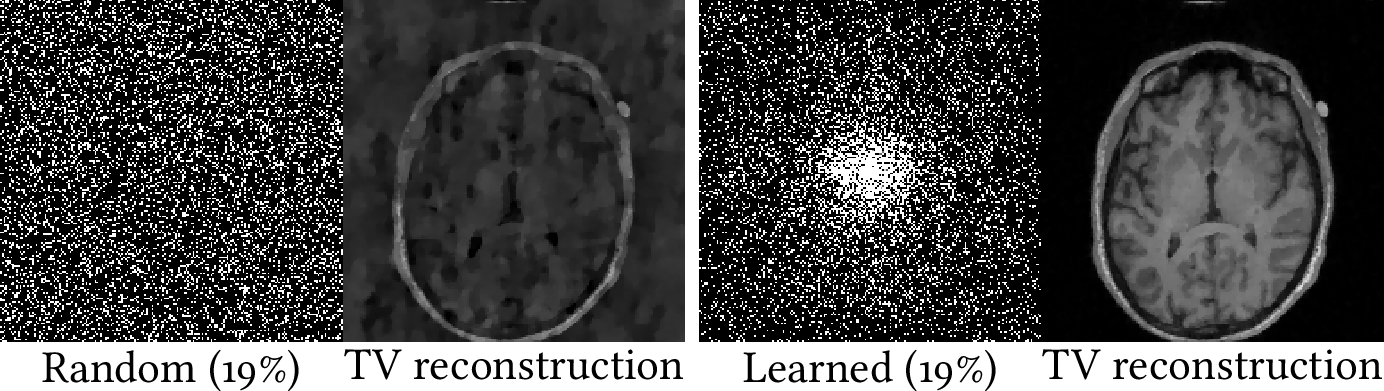

The most natural way to do so is through bi-level learning, where parts of the variational problem are parametrised and the corresponding parameters are tuned for optimal reconstruction of a set of training images: as an example, in [11] I proposed such an approach to jointly learning a sparse sampling pattern (\(S\) in \(A = S\mathcal F\)) for MRI and the parameters of a variational method. Increased sparsity of the sampling pattern corresponds to shorter acquisition times, which is desirable as the MRI acquisition experience is uncomfortable for patients. From the theory of compressed sensing [12], it is well-known that it is possible to acquire sparse measurements and reconstruct good images using variational regularisation, but generic sparse patterns do not work well, as seen in the figure above. On the other hand, the figure shows a learned pattern achieving the same sparsity but with much better reconstructions.

In the past few years, alternative data-driven approaches to solving inverse problems have been developed [13], most of them using NNs, and many of them based on or inspired by variational regularisation. The most well-known of these include learned regularisers, unrolled algorithms and plug-and-play (PnP). By incorporating knowledge about the forward model, these methods can be thought of as exploring the spectrum between the rigidity of variational methods with hand-crafted priors on the one hand and the flexibility of black-box DL approaches to inverse problems on the other hand. These methods allow for large improvements in reconstruction quality, and are often cheaper to compute than variational methods.

Group-equivariant NNs for inverse problems

Data-driven methods can encode more realistic prior information about natural images than hand-crafted priors encode, but this comes at the cost of needing increasingly larger amounts of training data as model sizes increase. Further prior information can be incorporated into data-driven approaches to inverse problems to decrease the amount of training data that is needed. An important way in which this can be done is by considering the symmetries of the data, as we did in [3], [4]: often, we want data points to be considered equivalent if they can be transformed into each other by a symmetry transformation, and we want our method to respect this symmetry. This is formalised in the concept of equivariance, with an operator being equivariant if its outputs predictably change when its inputs change under a symmetry transformation. As is shown in the figure below, there are complications when applying this concept to inverse problems: the forward operator may be incompatible with the data symmetries, resulting in a reconstruction pipeline that is not equivariant. However, variational methods (and methods inspired by them) decouple the prior information from the measurements, so we can take advantage of data symmetries in the prior information, for example by modelling the denoisers in unrolled algorithms and PnP as equivariant NNs. In [3], I showed that incorporating this information in data-driven approaches to inverse problems enables more efficient use of training data and increases robustness to transformations that leave images in orientations not seen during training.

PnP methods as convergent regularisation

While data-driven approaches to inverse problems have been shown to be highly effective from a practical perspective, they are lacking in theoretical support when compared to variational methods. In particular, there is no general theory of convergent regularisation for these methods. In fact, it is not even clear how one should properly make sense of this concept for data-driven methods, as they are usually trained at a fixed noise level, with no consideration for what should happen in the asymptotic setting as the noise level vanishes. For PnP methods, this means that we train a denoiser at a fixed noise level and it is not obvious how one should adapt the denoiser to vary its regularisation strength. In [14], my collaborators and I reviewed the theory of convergent regularisation and background on PnP methods, before introducing a way to achieve convergent regularisation with PnP methods with linear denoisers. In this setting, a novel spectral filtering operation applied to the denoiser (as opposed to the forward operator as in usual spectral approaches to inverse problems) is demonstrated to give a control of the regularisation strength, resulting in convergent regularisation.

Designing robust NNs using connections to dynamical systems

As described above, it is highly desirable from the perspective of inverse problems to design methods that can harness the flexibility of NNs, while ensuring that the method is robust. Of course, this is of more general interest too, as state-of-the-art DL methods have been shown to be susceptible to adversarial attacks [15]: small perturbations that drastically alter the prediction of a method.

The framework of structure-preserving deep learning provides a natural way of dealing with such issues, by drawing connections to continuous-time dynamical systems for the design of stable NN architectures [2]: identify families of systems with the desired stability properties, flexibly parametrise these systems and numerically discretise them in a way that preserves the stability. For instance, if we are interested in designing non-expansive (1-Lipschitz) NNs, we can use that gradient flows in convex potentials are non-expansive. Furthermore, simple NNs with one hidden layer and tied weights are gradients of convex potentials if we use a continuous increasing activation function. Finally, we can approximate the corresponding flows while preserving the non-expansiveness, by using explicit time-stepping integrators while keeping track of the operator norms of the weights to inform the maximum allowable step size.

Applications to PnP methods for inverse problems

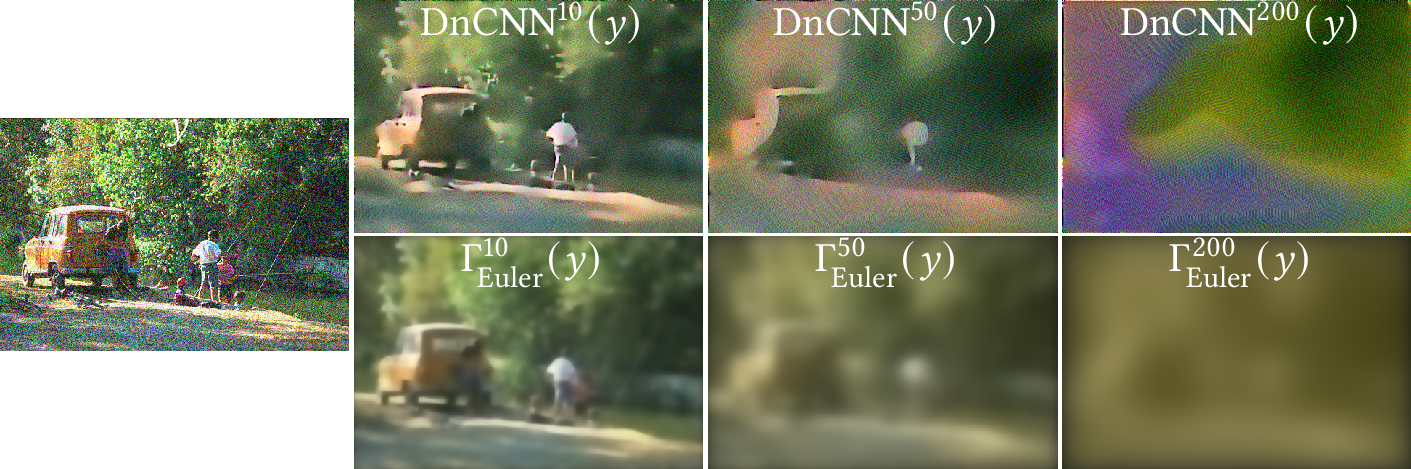

It turns out that this approach can in fact be adapted to give NNs that are provably averaged operators, which is beneficial for application in PnP methods, as it gives convergence of fixed-point iterations. Using an appropriate training procedure that respects the continuous-time dynamical system underlying the NN, I showed in [7] that it then becomes possible to train denoisers that perform on par with unconstrained DL denoisers but come with stability guarantees, as illustrated below.

Generalisations

If the desire is to design non-expansive NNs, it is not necessary to only compose non-expansive components: we can compose non-expansive and expansive components as long as their net effect is non-expansive. In [5], [6], we investigated the application of such an approach to adversarial robustness, observing that this gives more expressive networks (as evidenced by a higher clean accuracy) that are similarly robust to networks built using only non-expansive blocks. The aforementioned work was done in a Euclidean setting, but this is not an inherent limitation of the general approach. It is possible to extend it to general norms using the theory from [16]. We have applied this idea to adversarial robustness for graph data in [8]. Graph data comes with new challenges compared to image data: we need to take into account that an adversarial attacker may be able to change the connectivity of the graph, for example. Furthermore, it is natural to measure changes in the connectivity of the graph in terms of their sparsity, or relaxing this to an \(\ell^1\)-norm. Putting these components together, we design NNs based on a coupled dynamical system for the node features and the adjacency matrix, and find that the resulting networks provide significant gains in adversarial robustness compared to standard graph neural networks.

Ongoing work

In my research so far, I have mostly considered applications of structure-preserving machine learning to inverse problems, but I am currently working on applying these ideas to scientific machine learning more generally. In particular, I am extending these ideas to improve neural ODE and PDE solvers and I am keen to further expand my research interests in the direction of scientific machine learning.

As mentioned above, I have done a significant amount of work on designing robust NNs by taking inspiration from continuous-time dynamical systems, and I have recently been working on extending these approaches to graph data [8]. Since this requires processing both the adjacency matrix and node features, scaling this method up to operate on large-scale graphs is quite challenging and overcoming this issue is the topic of ongoing research. More generally, I am eager to explore the possibility of applying these ideas to other types of data, such as manifold-valued data, and to different notions of stability.

Finally, I remain very interested in data-driven approaches to inverse problems and continue to do research in this direction. As discussed above, there has been initial progress establishing theoretical results for data-driven methods to inverse problems (including the work [14] on achieving provably convergent regularisation using PnP methods), but this topic remains relatively undeveloped with opportunities to establish an appropriate, more comprehensive, convergence theory for these methods.

If you are interested in any of the topics mentioned above, don't hesitate to reach out to me at the following email address: